Abstract

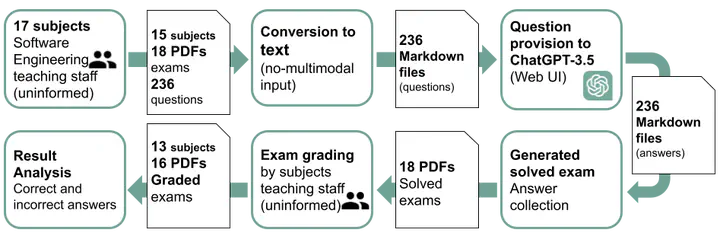

The release of ChatGPT at the end of 2022 was a milestone in the field of Generative Artificial Intelligence, but it also sent a shock through the academic world. For the first time, a simple interface allowed anyone to access a large language model and use it to generate text. These capabilities have a significant impact on teaching-learning methodologies and assessment methods. This work aims to obtain an objective measure of ChatGPT’s potential performance in solving computer engineering exams. To this end, ChatGPT was tested with actual exams from 15 subjects of the Software Engineering branch of a Spanish university. All the questions in these exams were extracted and adapted to a text format in order to obtain an answer from ChatGPT. The exams were then rewritten so that they could be graded by the teaching staff. The results show that ChatGPT can achieve significant performance in these exams: it can pass many questions and problems of different kinds across multiple subjects. As a fundamental contribution, a detailed study of the results by type of question and problem is provided, from which recommendations for the design of assessment methods can be drawn. In addition, an analysis of the impact of ChatGPT’s non-deterministic behavior on the answers to test questions is presented, concluding that a strategy to reduce this effect is needed for performance analysis.